Rethinking My Relationship With Technology

Recently, I’ve been struggling with many little questions that have ultimately culminated in one big question. It’s been deeply frustrating, because the only answer I keep coming to is “I don’t know.”

I’ve been reflecting a lot over the last few years on my relationship with technology and the influence it’s had on my life. I’ve tried to answer questions like “Does having a subreddit for every esoteric interest make life better?” or “How valuable is Twitter, actually?” The spirit of these questions is certainly not new, but I’ve yet to find a satisfying answer. While I originally thought these questions had some value, the imminent arrival of my son has given them a new significance.

My father wrestled with versions of them, too. I remember him musing about “kids these days” when he saw AOL Instant Messenger, email, and text messages dominate my social life, but he’s also an engineer who has seen technology repeatedly improve our lives and society. He raised me to be technologically optimistic. Some of my fondest memories are of building LEGO Mindstorms robots and computers together.

Everyday questions, like how in the world a magnet could pick up a car, were met with an experiment: we constructed an electromagnet just to see how many nails we could pick up. Christmas always brought an amazing new technology that fundamentally changed the way I viewed the world. I remember the iPod I received in 2003. That I could fit so much music on it was remarkable! And the user interface was just… stunning. Novel gadgets were coveted because of the hope that they would be good, and they were good. They were always good.

As a naturally curious person, I was taught by example that technology was a force multiplier that allowed us to answer any question imaginable. Indeed, that’s still the case. The internet still lets me read about any topic I want, and I’ve yet to encounter a question that wasn’t answerable through diligent research or by connecting with experts.

So then what’s the problem? We all know. While technology has increased our access to experts, it has also made it easier to seek out echo chambers, feeding misinformation and political polarization. We have unmatched tools for staying in contact with friends near and far, and yet we’ve never felt more isolated and lonely. We have tools that promised to drastically increase our productivity, and yet all they seem to do is interrupt dinner with notifications about messages that don’t matter. Addressing each of these issues in isolation feels difficult, given that they’re all facets of a broader question: How can we take the best of technology while avoiding the worst?

Trying to answer this question has led me to reconsider my relationship with technology and the influence it has on my life. Along the way, I’ve sought out books, articles, and conversations with close friends, but many of these have inspired cynicism toward technology. I feel that this cynicism is myopic, because technology has always inspired some form of hope in my life. My life as I know it wouldn’t be possible without it! Working in the technology sector has provided my livelihood. I even believe in the technologies we continue to build. I truly believe that AI, if worked on thoughtfully, can empower untold societal change. I see the possible benefits of the systems we’re building and I see no reason not to build them, but this is the response of an optimist.

Unfortunately, there is a growing body of literature that shows that many of our modern-day technologies are ruining our ability to read, reason, and focus. Technologies such as social networks, streaming platforms, and smartphones provide us with intellectual junk food, specifically designed to let us disengage from the world around us. It’s just so easy for me to pick up my phone and scroll through Reddit any time I get bored, possibly even during conversations with my wife. And for what? So that I can skim through yet another poorly informed comments section filled with strangers intent on scoring karma with dank memes and hive-mind claims. Stated that way, it’s obviously a poor trade-off, and yet I do it. It’d be easy to spend the rest of this post ranting about all the ways modern-day technologies intellectually hijack me, but that’s both boring and unoptimistic. Instead, it’s worth focusing on the distinct benefits that technology provides and on how to minimize the downsides.

One of the things I noticed most was notification anxiety: I always felt the need to keep my notification tray empty, which resulted in more than 100 phone unlocks per day, on average. I’ve found that by keeping my phone on Do Not Disturb for weeks at a time, I’ve been able to quell that anxiety while still keeping the notification tray empty. Importantly, I now sit down and deliberately choose to process email, and that’s the only time I view it. I haven’t yet removed the Gmail app from my phone because its search feature is far too useful, but without the constant notification anxiety, I’ve stopped checking email on it as well. While this felt revelatory at the time, it seems obvious now. The question then becomes: what is the point of email notifications? Or of the sheer amount of email we send and receive? Do we actually feel any more connected, or do we just end up feeling more distracted? I’m not actually sure.

The capture of attention might be most severe in the professional world, where I’m expected to respond to emails, Slack messages, instant messages, in-person chats, and phone calls. There are days when I do nothing but respond briefly to a range of emails and Slack messages for 8 hours and then head home exhausted and entirely unproductive. Given the types of technologies I’m building, I certainly don’t have time to waste entire days keeping up with all of these communication channels. I have math to do, code to write, and models to train. All of those tasks require deep thought, and getting distracted by messages, my phone, or the news actively prevents me from building the things I want to build. I have found that by cutting these distractions out, I can more easily keep up with the literature in my field. Since this is my profession, understanding the gory details and nuances of how an algorithm or model develops over time is literally a professional responsibility. Because I’m not constantly worried about the racist things that Steve King says, I have the mental energy to engage with a new and interesting knowledge graph paper. Is it actually good that I’ve decided to become a less informed citizen? Or have I actually become less informed? Is it even a problem? And what do I want to model for my child?

In my personal life, it’s been easy to experiment with possible actions, but how can I make sure I’m actually picking the healthiest solutions? Do we even know what the healthiest solutions are? What lessons do I want to teach my son about technology? Is guarded optimism even an option? Given that many of the observations above highlight the anxiety introduced by notifications and the other constant refocusing that technology demands, how do I help my son avoid falling into these same traps? Is it even a problem he will face, or am I making a mountain out of a molehill?

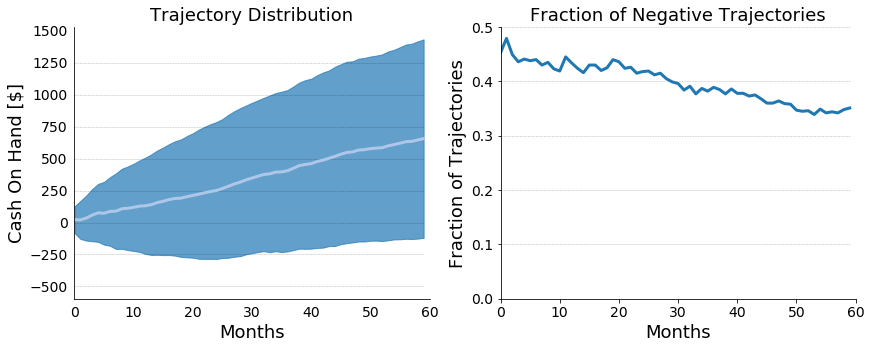

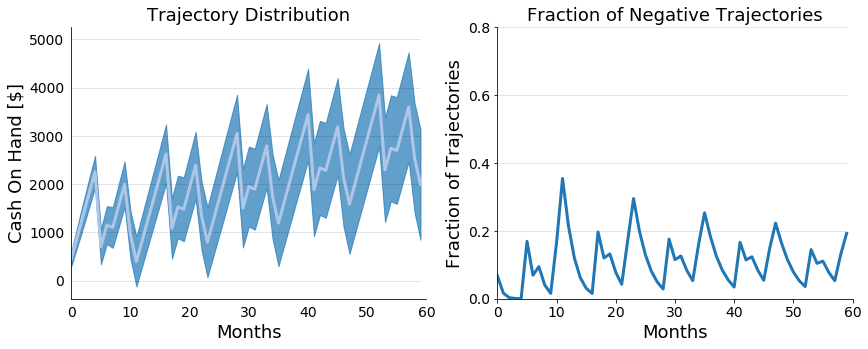

Furthermore, I’m broadly part of the problem, given the industry I work in. If, after deep examination of my life and my relationship with technology, I’ve resolved to drastically cut back on my technological interactions, my question is: how can I take these personal, introspective lessons and generalize them to be professionally responsible? It’s certainly plausible that sectors such as ad-tech, algorithmic targeting, and gamification should be avoided. I’ve been able to avoid such projects thus far, but what about Forge.AI and the fintech/news-processing technologies we’re building? What are their downstream ramifications?

What do we as a profession owe the people who have used our work product? Is an apology sufficient? Is it even warranted? Are we as a profession just responding to consumer desires? We can try that argument, but we’ve all dogfooded our own systems. I know many people in tech who refuse to own smartphones for the reasons identified above, and yet they still build these technologies. Are they just assuming that the end user is educated enough to make the right decision? Is that ever really the case?

Do we as a community even care, or is this a luxury afforded only to those who are already able to support their families? And who do I mean by “we,” anyway? It feels like I might be using “we” to avoid blaming myself, but maybe it’s an acknowledgment that these problems are bigger than me? Maybe.

So what are we to do? What am I to do? I’ll probably continue to think about this, while continuing to disarm how uncomfortable this conversation can be by making jokes. I’ll tell myself that by thinking this through from time to time, and talking about it over drinks with friends, I’ll likely be doing my part. Maybe I will be, or maybe it’s all just a day late and a dollar short. Maybe.